Where AI meets engineering excellence: what we’re learning at Nine

Written by Clint Brown, Engineering Practice Lead

After two years of using AI coding assistants, Nine has learned that these tools amplify existing engineering practices. Teams with strong release processes, automated testing and collaborative code review extract the most value from AI, while outlier teams require targeted interventions. Quality concerns are proving mostly unfounded, with AI users showing more consistent development patterns. Value is emerging in areas like software upgrades, modernisation and security vulnerability resolution.

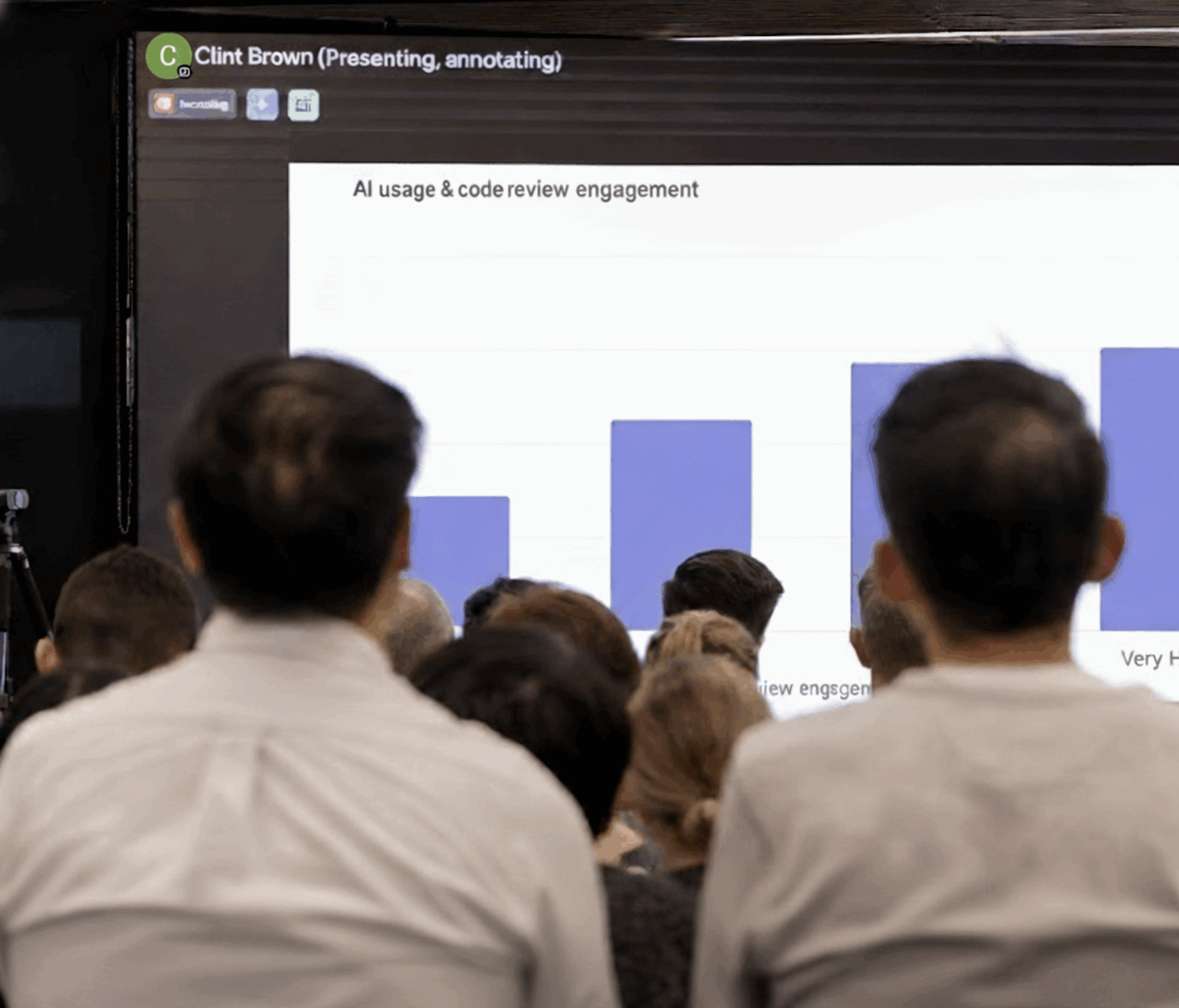

From data to decisions: consolidating engineering metrics

We recently consolidated our engineering metrics reporting into a single developer insights platform, which aggregates data from core platforms to provide a holistic view across the entire Digital team. It also enables staff to give vital feedback about areas for improvement — our first quarterly survey achieved a response rate of over 99% from Digital staff. With this complete view across Digital, we can distinguish between issues that affect all teams and those that affect only individual teams, allowing us to apply different targeted strategies to resolve them.

The platform measures our utilisation of AI tools and their impact on key areas such as work allocation, quality and velocity. Nine has been tracking adoption of AI coding assistants through daily and weekly active users. As adoption has grown, our main comparison groups have shifted from AI / non-AI users to heavy / moderate / light users of AI tools. We are also tracking pull requests authored by autonomous coding agents to measure their merge rate, time to merge and the extent of human involvement.
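As a rough illustration of the cohort and agent metrics described above, the following Python sketch buckets developers by usage and summarises agent-authored pull requests. It is not Nine's implementation: the thresholds in `usage_cohort`, the `AgentPullRequest` fields and the use of human commits as a proxy for human involvement are all assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


def usage_cohort(active_days_last_4_weeks: int) -> str:
    """Bucket a developer by AI-assistant usage.

    The cut-offs (12 and 4 active days) are illustrative assumptions only.
    """
    if active_days_last_4_weeks >= 12:
        return "heavy"
    if active_days_last_4_weeks >= 4:
        return "moderate"
    return "light"


@dataclass
class AgentPullRequest:
    """Hypothetical record of a pull request authored by a coding agent."""
    opened_at: datetime
    merged_at: Optional[datetime]  # None if closed unmerged or still open
    human_commits: int             # assumed proxy for human involvement


def agent_pr_summary(prs: list[AgentPullRequest]) -> dict[str, float]:
    """Return merge rate, median hours to merge and share of merged PRs
    that needed at least one human commit."""
    merged = [pr for pr in prs if pr.merged_at is not None]
    hours = [
        (pr.merged_at - pr.opened_at).total_seconds() / 3600 for pr in merged
    ]
    with_humans = sum(1 for pr in merged if pr.human_commits > 0)
    return {
        "merge_rate": len(merged) / len(prs) if prs else 0.0,
        "median_hours_to_merge": median(hours) if hours else 0.0,
        "share_with_human_commits": with_humans / len(merged) if merged else 0.0,
    }
```

The median is used for time to merge because a handful of long-lived pull requests would otherwise dominate an average.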

These metrics revealed a critical insight: AI velocity gains correlate strongly with specific engineering practices.

Engineering practices that unlock AI‑driven velocity

Our teams that turn AI into real velocity gains share these engineering practices:

- Releasing small changes frequently.

- Sharing responsibility for code review.

- Automatically creating a live preview server for pull requests to enable review and collaboration prior to merge.

- Running automated tests in all deployment environments.

- Automatically deploying (with testing gates) through to production when a pull request is merged.

Outlier teams benefiting less from AI require different interventions. High-velocity teams with low AI usage may represent a missed opportunity: they can hear more from peers having success with AI through showcases, knowledge-sharing sessions or peer mentorship. Other teams may need to remove unnecessary friction and improve their confidence in releasing code to support more pull requests from AI tools.

These practices not only enable AI velocity gains — they also help us address one of the most common concerns about AI-generated code: quality.

AI code slop: rhetoric vs reality

Much has been made of ‘AI code slop’, the tendency of LLMs to produce large amounts of low-quality code. Although we have observed a moderate increase in pull request size (lines of code) over time, our frequent users of AI coding assistants actually produce smaller, more consistently sized PRs than infrequent or non-AI users. This suggests AI coding assistants may help create more stable and predictable development patterns regardless of task complexity. As for the overall increase in size, AI coding tools may contribute by generating more robust features, test cases and documentation, but they can also cause harm by duplicating existing code instead of reusing it when not given clear guidance. Human review remains essential, both to evaluate AI output carefully and to keep pull requests small enough for peers to review easily. As engineers increase their AI usage, time savings are also reinvested in code review, which improves comprehension, increases change confidence and reduces failures.
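One way to compare how consistently sized pull requests are across usage cohorts is to look at the median and the spread of lines changed. The sketch below uses the interquartile range as the measure of spread. That choice, the function name `pr_size_profile` and the sample numbers are assumptions for illustration and are not Nine's data or method.

```python
from statistics import median, quantiles


def pr_size_profile(lines_changed: list[int]) -> dict[str, float]:
    """Summarise PR sizes for one cohort.

    A smaller interquartile range (IQR) means more consistently sized PRs.
    """
    if len(lines_changed) < 2:
        raise ValueError("need at least two pull requests to measure spread")
    q1, _, q3 = quantiles(lines_changed, n=4, method="inclusive")
    return {"median": median(lines_changed), "iqr": q3 - q1}


# Made-up sample sizes (lines changed per PR), for illustration only.
frequent_users = pr_size_profile([40, 60, 80, 100, 120])
infrequent_users = pr_size_profile([10, 30, 80, 400, 900])
print(frequent_users, infrequent_users)
```

The IQR is less sensitive than the standard deviation to a single very large pull request, which makes it a reasonable fit for skewed size distributions.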

Among other code quality indicators we track, self-reported change failure rates and maintainability scores show little impact from AI, with only small isolated increases in PR revert rates observed.

Under-the-radar areas where AI delivers value

As coding agents have improved at maintaining focus on long-running tasks, we’re also assigning them greater responsibility. We’ve used agents for software upgrades and migrations; early efforts in Next.js and React projects have delivered good output and significant time savings, reducing effort from weeks to days. Legacy modernisation is another area where agents can help us uncover the actual behaviour of existing systems and assist in translating code to modern languages. While concerns about AI coding tools introducing vulnerabilities are well-founded, LLMs also possess broad knowledge of common security vulnerabilities and patterns. We’ve successfully used coding agents to resolve CVEs in dependencies and fix multiple types of vulnerabilities in our code.

Beyond code generation, our engineers self-report using AI coding assistants for a wide variety of tasks, including conducting research, understanding internal and external systems, and assisting human-led solution design and task planning. We also use AI heavily for debugging, including log diagnosis and code logic analysis. When deciding how to measure AI’s impact, choose a set of metrics that reflects where AI may add value across the full software development lifecycle. Senior leadership can anchor on high-level business outcomes (spend efficiency, capacity saved and waste reduction), while engineering teams zero in on the drivers of those outcomes, such as velocity, code review, quality and developer flow.